By adaptive - July 1st, 2014

Facebook’s emotional contagion experiment again highlights the need for corporations to pay close attention to how they manipulate personal data

Now that the furore over Facebook’s research into ‘emotional contagion’ has died down a little, it is worth looking at the research and its consequences for all corporations engaged in social media communications. It is clear that undertaking this kind of research is at best ethically suspect and at worst a potential breach of a number of privacy regulations.

In a public statement, the Facebook researcher who designed the experiment said: “The reason we did this research is because we care about the emotional impact of Facebook and the people that use our product. We felt that it was important to investigate the common worry that seeing friends post positive content leads to people feeling negative or left out. At the same time, we were concerned that exposure to friends' negativity might lead people to avoid visiting Facebook. We didn't clearly state our motivations in the paper.

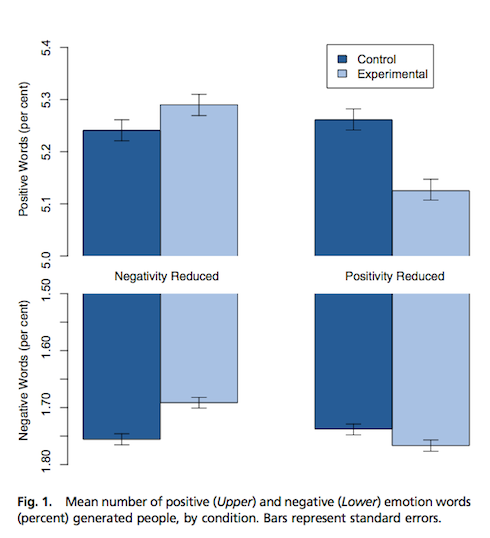

“Regarding methodology, our research sought to investigate the above claim by very minimally deprioritizing a small percentage of content in News Feed (based on whether there was an emotional word in the post) for a group of people (about 0.04% of users, or 1 in 2500) for a short period (one week, in early 2012). Nobody's posts were "hidden," they just didn't show up on some loads of Feed. Those posts were always visible on friends' timelines, and could have shown up on subsequent News Feed loads. And we found the exact opposite to what was then the conventional wisdom: Seeing a certain kind of emotion (positive) encourages it rather than suppresses it.

“The goal of all of our research at Facebook is to learn how to provide a better service. Having written and designed this experiment myself, I can tell you that our goal was never to upset anyone. I can understand why some people have concerns about it, and my co-authors and I are very sorry for the way the paper described the research and any anxiety it caused. In hindsight, the research benefits of the paper may not have justified all of this anxiety.”

That is all well and good, but many have expressed concern, including members of the Commons media select committee. Jim Sheridan, a member of the committee, told the Guardian: "This is extraordinarily powerful stuff and if there is not already legislation on this, then there should be to protect people." He added: "They are manipulating material from people's personal lives and I am worried about the ability of Facebook and others to manipulate people's thoughts in politics or other areas. If people are being thought-controlled in this kind of way there needs to be protection and they at least need to know about it."

Companies like Facebook perform these kinds of studies constantly in an effort to develop a deeper understanding of their users. Not surprisingly, the research concluded: “Emotional states can be transferred to others via emotional contagion, leading people to experience the same emotions without their awareness. Emotional contagion is well established in laboratory experiments, with people transferring positive and negative emotions to others.”

What is clear from the fallout from Facebook’s research is that corporations undertaking any similar research need to take care and clearly understand the legal basis for it. Facebook relies on the ‘informed consent’ that is embedded in its terms and conditions. Whether this will be sufficient in the future remains to be seen.

Users of social media, however, will react negatively if their data is manipulated in any way that seems to be out of their control. Indeed, research from TRUSTe concluded: “The potential impact of this concern over business privacy practices is significant as consumer trust is falling. Just over half of US Internet users (55%, down from 57% in 2013) say they trust businesses with their personal information online. Furthermore, 89% say they avoid companies they do not trust to protect their privacy, the same as in January 2013. 70% said they felt more confident that they knew how to manage their privacy than one year ago, but this can cause consumers to take actions, which negatively impact businesses.”

Of course, A/B testing, which is essentially a psychological experiment to determine which webpage performs best, has been used for years. It’s the manipulation of personal data by Facebook that has caused the outrage.

With the Information Commissioner looking at the Facebook action for any potential breach of the Data Protection Act, brands should think carefully and ensure they are working with the full approval of their social media users before embarking on any research that could be defined – or at least perceived – as being clandestine in any way.

Image Source: Freedigitalphotos.net